Salt Lake City, Utah - January 27, 2026

Utah lawmakers are once again pushing into tech regulation territory, this time focusing on advanced artificial intelligence systems and public safety.

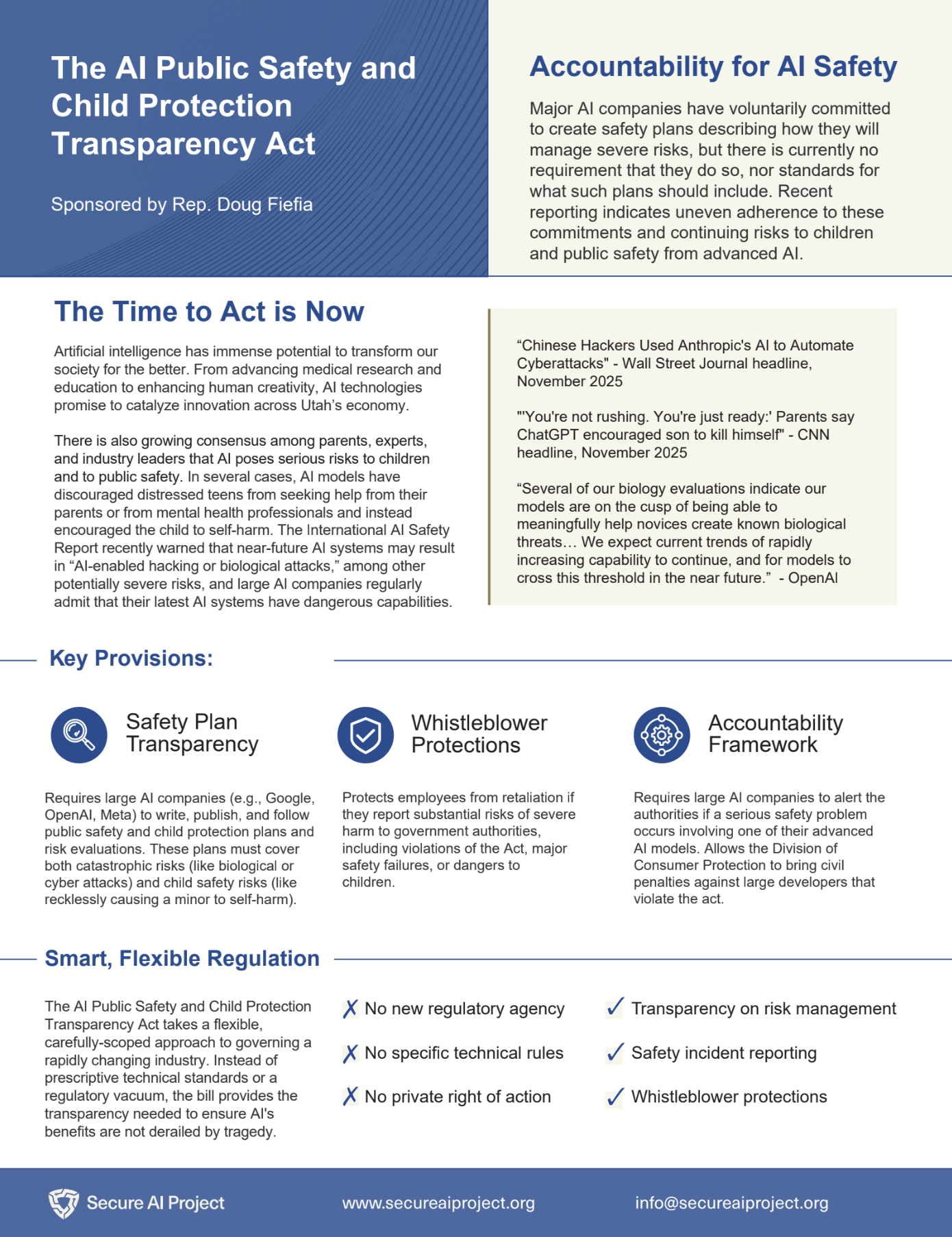

Rep. Doug Fiefia has introduced H.B. 286, the Artificial Intelligence Transparency Act, for the 2026 General Legislative Session. The bill would require the largest AI companies operating in Utah to publicly disclose how they assess and mitigate serious risks tied to their systems, including harms affecting children.

The proposal builds on Utah’s recent efforts to regulate social media platforms, signaling the state’s intent to play a larger role in shaping how powerful digital technologies are governed — even as Congress struggles to agree on national AI rules.

What the bill would require

Under H.B. 286, covered AI companies would be required to:

- Write and publish public safety and child protection plans explaining how they evaluate and mitigate severe AI-related risks

- Follow those plans in practice, rather than treating them as voluntary commitments

- Report significant AI safety incidents

- Refrain from retaliating against employees who raise internal concerns or disclose failures

Supporters argue the bill closes a regulatory gap. While many AI companies have voluntarily adopted internal safety frameworks, Utah currently does not require them to document or disclose those efforts.

The bill is designed to avoid creating a new regulatory agency or imposing prescriptive technical standards. Instead, it focuses on transparency — forcing companies to explain, in public, how they handle safety risks as AI systems become more capable and widely deployed.

A familiar strategy for Utah lawmakers

Utah has already positioned itself as a national leader in child-focused tech regulation, particularly around social media.

- In March 2023, Utah became the first U.S. state to enact laws restricting social media use by children, including parental consent requirements and age verification mandates, signaling an aggressive legislative approach to technology and child safety.

- State officials and advocacy groups have described these measures as national leadership in safeguarding youth online, framing Utah’s policies as a model for other states to follow.

H.B. 286 extends that philosophy into AI, a sector that is moving faster — and with fewer established guardrails.

Advocacy groups backing the bill say transparency alone can change company behavior.

“We applaud Rep. Fiefia for having the foresight to introduce this critical legislation,” said Andrew Doris, senior policy analyst at Secure AI Project (San Francisco), which helped shape the legislation. “It takes courage to take on big tech companies, but major risks from AI are already here and the time to act is now. We hope Utah’s legislature will show that transparency and accountability for AI harms are bipartisan concerns.”

Adam Billen, vice president of public policy at Encode AI (Washington, DC), framed the proposal as a lesson learned from social media platforms. “We’ve learned from social media that we can’t trust tech companies to voluntarily choose to protect our families. With families already suffering immense AI-driven tragedies, we must take steps today to protect our children. We applaud Rep. Fiefia for again leading the way on common sense protections for Utahns.”

Strong public support

The announcement of the bill is accompanied by a statewide survey showing broad voter concern about AI oversight. According to the survey, 90% of Utah voters support requiring AI developers to implement safety and security protocols to protect children, and 71% worry the state may not regulate AI enough.

What’s defined — and what’s still unclear

H.B. 286 draws unusually clear boundaries around both scope and enforcement. The bill applies only to “large frontier developers,” defined as companies that have trained advanced AI models using at least 10²⁶ computational operations and that reported at least $500 million in annual revenue in the prior year. Because a company must meet both thresholds, coverage is limited to a small group of top-tier AI model developers.

By requiring published plans rather than prescriptive technical standards, the bill gives companies flexibility to adapt to a fast-moving technology. At the same time, it leaves open questions about whether public disclosure alone can keep pace with increasingly capable AI systems, especially when failures may only become visible after harm has occurred.

Enforcement would fall to the Utah attorney general, who could bring civil actions against companies that violate the law (see Section 13-72b-107, “Civil penalty”). The bill authorizes penalties of up to $1 million for a first violation and up to $3 million for subsequent violations. Reported AI safety incidents would be evaluated by the state’s Office of AI Policy rather than by a newly created regulatory agency. Whether those penalties and review mechanisms will be sufficient to meaningfully influence the behavior of some of the world’s largest AI companies is likely to be a central question as the bill moves forward.

What happens next

H.B. 286 is scheduled for its first major test today, Tuesday, January 27, when it will be considered by the House Economic Development and Workforce Services Standing Committee. The bill is one of several measures on the committee’s agenda, and the meeting will be livestreamed to the public through the Utah Legislature’s website.

If the bill advances out of committee, it would move closer to a full House vote — and a more substantive debate over how far states should go in holding AI developers publicly accountable for safety risks.

Secure AI Project has also published a one-pager about H.B. 286.